Update 16 Sep 2024: JSONQuery 2.0 is now available.

Continuation of interview with Steve Senft-Herrera

[Editor’s note: the demo file and custom function have been significantly updated since part 1.]

Demo file: CF_JSONQuery_20211130_0120_PUBLIC.fmp12.zip

KF: Welcome back Steve for part 2 of our JSONQuery conversation.

SSH: Thank you, Kevin.

KF: One thing we didn’t mention last time, because they were late-breaking additions, were the inequality operators.

SSH: Yes. Well, as background for some of these topics: The original version of JSONQuery was built on a philosophy that said: “Let’s only do that which we can do remarkably fast.”

KF: And then reality intruded…

SSH: Ha! Well, whether the reality of scope creep, or the reality that not everything can be remarkably fast, I still held onto that idea and only allowed for matching rules which, perhaps better stated, had the potential to be remarkably fast.

And so the original version was limited to variations on equality matching.

Once the new version was in the works, and we started fleshing out the aggregate functions, it became clear to me that I had abandoned that original philosophy or guideline, and instead things had evolved to a guideline that said:

“Let’s offer what we can make intuitive, and still keep an eye out for performance, but let’s not block adding a feature just because it won’t be a speed demon.”

KF: I’m so glad you were willing to compromise on that point.

SSH: Well, I’m not sure if it was a compromise, so much as an evolution of sentiment. Because, once I was adding in the next round of features, I didn’t really feel any regret.

But, this new guideline is what made it ok to start considering features such as: <, >, >=, <=, etc..

KF: And you introduce those operators here.

KF: And they get a good workout here as well.

SSH: Indeed! And, I should mention, the inclusion of date interpretation is also thanks to this newer feature guideline that I mentioned.

KF: And we should talk about two more new features, right?

SSH: Yes — Along with the inequality operators, there is the addition of a few pattern-based match operators, as well as a new kind of equality operator that improves the ability to match numeric values.

KF: Okay, I see we have NUMERIC_EQUALS in examples 10-12.

SSH: Yes — the motivation for adding NUMERIC_EQUALS was to handle cases where the JSON includes numeric data that has been stored in the JSON as strings. As you know, this is not particularly uncommon.

KF: Au contraire mon frere. Exceedingly common.

SSH: I got to thinking about this, and I realized that regular string matching would fail us in cases where, say for example, a value had been stored as “150.00”.

Or better stated, I guess I should say that the string matching would fail unless the dev using JSONQuery realized that they would need to match with a string value of “150.00”, and not a numeric value of 150.

I didn’t like having that potential for either confusion, or simply that JSONQuery might not be able to help out in a case which is so similar to where it does function well.

KF: Makes sense.

SSH: After thinking about this for a while, I decided that what I would want to have (as a user of JSONQuery) is the ability to match against JSONString nodes, but to be able to tell JSONQuery that it should treat those string values as numbers, i.e., that before performing a comparison to my target value, that it should wrap the value from the JSON in GetAsNumber, so as to be sure that the comparison operates as a numeric comparison. And thus was born NUMERIC_EQUALS.

KF: So you can match on string, number or both.

SSH: Yes — matching strictly on number values is actually no different than just using the regular EQUALS match operator, simply because the JSONNumber values don’t have the ambiguity that a string value would have.

KF: Very nice. You’ve anticipated and remedied what could have been a real challenge.

SSH: But, just for the sake of having some coherence, JSONQuery allows the dev to specify that the target value type is JSONString, JSONNumber, or either of these two. I did my best — to be honest, I can see some room for improvement on this concept, but, I do think it is a good step in a useful direction. If we take a look at Example #10, we can see this in action:

SSH: Various values are stored under the “a” key in the objects in the array. If we were to try to match them as strings, we would not be able to grab all values which represent the value of 12000.

KF: Because you left the value type empty you’re matching on both text and numeric values.

SSH: Yes. This means that we’ll not only get values such as “12000”, but we’ll also get 12000 (without the quotes).

KF: And then in example 11 you show just string matches, and in example 12 you show just number matches.

SSH: So, in a nutshell, the point of the NUMERIC_EQUALS operator was a way to try to bring the power of the EQUALS match operator to cases where the JSON has stored numeric values as JSONStrings.

KF: I’m intrigued by these new Pattern Operators.

SSH: How about if we take a quick look at BEGINS_WITH and ENDS_WITH, and then we can move on to CONTAINS?

KF: Sure. Let’s take a look at example 13.

SSH: BEGINS_WITH is nothing new. It is actually a “renaming” of the LIKE operator. And it functions as LIKE always has: It matches values which start with (begin with) a supplied substring value.

Because I was going to be introducing an ENDS_WITH operator, I thought it might be more intuitive to standardize the naming a bit. Hence, ENDS_WITH was introduced, and LIKE was renamed to BEGINS_WITH. But that said, one can still use “LIKE” as an operator — there is backwards compatibility for that.

KF: Excellent.

SSH: ENDS_WITH behaves as one would expect from the name: A value is considered to be a match if it ends in the specified substring.

SSH: As is the case with BEGINS_WITH, ENDS_WITH is case-insensitive. All that has to happen is for the substring to match (regardless of case), and it will be considered a match. So, for instance, in example 14, we are matching any record that has an email address that ends in “.net”.

But, even if we had specified “.NET”, we would match the same items.

KF: That’s a great example.

SSH: Sometimes I think that it’s one of the (few?) examples that actually feels like a real-world example to me.

KF: Well not to denigrate your other fine examples, but I agree that #14 feels very plausible. And if I change the result path to “email”, here is the result.

SSH: CONTAINS is really just like a PatternCount type of test, but it does come with a variation that allows for case-sensitivity. In example #15, you can see that we are matching all items which contain the string “Chi” within the street address.

SSH: Example 15 uses CONTAINS, which is case-insensitive. This means that it will turn up matches for street addresses which contain strings such as “Michigan” as well as “Chinook”.

KF: Six objects are returned in example 15 vs. three objects in example 16.

SSH: Yes — exactly. In contrast, since ex. 16 uses CONTAINS_CASE_SENSITIVE, and since we have specified that our match string is “Chi”, we will no longer return matches for strings such as “Michigan” (where the “c” is not capitalized).

KF: Can you elaborate on which features are ‘slow’, relatively speaking vs. the faster ones?

SSH: Sure. It’s an under-the-hood distinction. There are features which necessarily require iterating through *every possible* match element to determine the match results (these are the slower features). And then there are features which can make use of a shortcut which can really cut down on the amount of iteration which has to happen (the faster ones).

For some examples:

Faster: EQUALS, LIKE, EXACT, IN matching.

Slower: Anything with MATCH_ALL, the inequality operations, any comparison to a Date-String.

KF: Range operators?

SSH: Range operators are in the “Slower” category. But — once I got started adding in the “slower” features, I found that the speed was not as lacking as I imagined it would be.

KF: Speaking of range operators, I like it that you give us the “x” options for ranges. Without the “x”-es, range operations behave as they do in FM. With one or both “x”-es, end points are ignored.

SSH: Yes. So, for example, if you want to find values greater than 3, but less than, or equal to 10, you would use:

x...

And that would mean to exclude the lower bound (3 in my example), but to include the upper bound (10 in my example).

KF: That’s very clear. I’m glad you included that option.

SSH: It would have felt incomplete to me without it. The tricky part was just figuring out a way to present the four options that would feel reasonably intuitive.

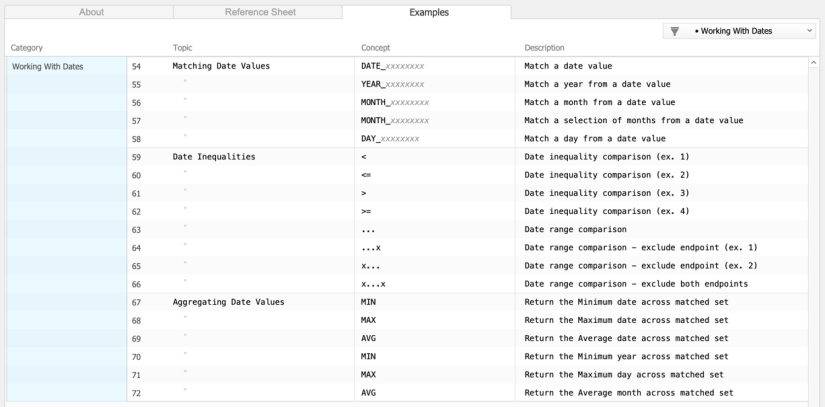

KF: Can we talk about the prefixes and suffixes for dates?

SSH: Sure thing. So, once I was adding features in a more liberal fashion, I just sat down and thought about what I would like to see included. This brought about the inequalities, but it also brought about the desire to match on date values.

Because the format of a string that represents a date can vary considerably, I decided to define some basic formats that would be supported. And this is how the suffixes YYYYMMDD, YYYYDDMM, DDMMYYYY, and MMDDYYYY came about.

SSH: And the ability to have your date separator chars be any of a small number of common ones, e.g., dot, dash, slash.

KF: You have invested a tremendous amount of time and energy into this.

SSH: I think we just got lucky that it came out ok. I was basically building features for myself, but trying to keep them sane. And then, fortunately, I had your feedback to help with the sanity part of it. It was super-valuable to me to be able to share/exchange ideas with you, prior to release.

KF: I appreciated the opportunity. OK, so you’ve explained about the suffixes — what about the prefixes?

SSH: The prefixes are just a few: DATE, DAY, MONTH, YEAR. The prefix tells JSONQuery what type of value you want to pull out of the date to compare against something (or aggregate, for that matter).

KF: For me, examples 58 and 72 really show the power of those prefixes. In example 58 you’re saying return objects where the birthday is the 15th (of any month)…

SSH: Yes — that requires the use of the DAY_ prefix.

KF: And since the result path is empty, return the entire object?

SSH: You are correct. I left the result path empty, just to try to keep the example simpler. And the empty result path does mean return the entire matching object. But there could easily be use cases where maybe we just want to return some portion of the matching object, and we could have used a non-empty ResultPath for that.

And — to hint at the more sophisticated option: We could even include a Transform, to pick-and-pull the data we want to return from the matching object(s).

KF: I’m looking forward to discussing transformations w/ you. But first can we discuss ex 72?

SSH: Example 72 — let’s have a look.

So in example 72, we actually are not using any date value for the purposes of matching. We have used the MATCH_ALL operator, which tells JSONQuery to process every item in the supplied input array.

KF: In the result path we have AVG ( values[*] ; MONTH_YYYYMMDD ).

SSH: Now that we have the ability to tell JSONQuery what format a date string is in, then we should be able to use that in other places, too.

KF: Because in the previous example a date string appeared as a value type, but now it’s showing up as part of the result path.

SSH: And an example of that is applying an aggregate to a date value. All it takes is telling JSONQuery the expected format.

KF: And the source JSON is a bit messy when it comes to the “values” array.

SSH: Ah yes — that example JSON is messy. The reason being is that I was too lazy to cook up a bunch of different examples to illustrate all the possibilities, so that JSON wound up being a true hodge-podge of keys and values.

KF: I’m glad it’s messy. It makes the example more relevant.

SSH: The other thing about this hodge-podge JSON is that it helps demonstrate the behavior that when operating on a date, only JSONString nodes will be included in the operation. That is one of the requirements that, though not explicitly stated, does exist: JSONQuery date functionality only operates on string values. And so, if we play around enough with the examples, we can demonstrate for ourselves that the number and Null values are not being considered.

KF: Makes sense — thanks for confirming. This is a great example, because so often we do encounter messy JSON out in the real world.

SSH: You know, I think I have been fortunate with respect to not encountering messy JSON, but I did want there to be some illustration of how it will be handled by JSONQuery.

KF: So in this example you’re telling JSONQuery to average the month number of all the entries that have a well-formed date inside their “values” array, and to ignore anything that isn’t well-formed.

SSH: JSONQuery is going to shine the most when the data-types are consistent across multiple structures, but in the event that this is not the case, I’d like people to at least have thought about this a bit.

KF: And you’ve explicitly stated your expectation re: what constitutes a well-formed date by specifying the YYYYMMDD suffix.

SSH: Yes — exactly. The YYYYMMDD tells us the order of the date components, and, given that there is support for a few different separators, we could have input such as:

- 2021-12-14

- 2021/12/31

- 2021+12.31

- etc.

KF: Nice and flexible. [Ed. note: I later confirmed w/ Steve that the third example is not a typo — it is okay to mix separators.]

SSH: I’d kind of like to see how the "" is treated. My belief is that it should be ignored, but I can’t remember the code that handles that anymore, so we should see.

KF: Okay, bearing in mind that this is our query…

If I simplify the JSON Input for example 72 like so, and run the query again…

Preliminary testing indicates the "" is ignored, and as one would expect, the “average” month is the same as the month of the one valid date entry.

SSH: Nice test case. Thank you for that.

KF: Okay, now I’ll replace the “null” with a valid date, so the month number of the first valid date entry is 5 and the month number of the second valid date entry is 9…

…and, indeed, ( 5 + 9 ) / 2 = 7.

SSH: So, in case it helps people reading this, I’ll try to back up for a second. JSONQuery tries to define operators that will feel intuitive and standard to FMP users: Equals operations, inequality operations, and aggregate operations. When the nodes within the JSON are JSONNumber data type, there’s no guessing about how numeric functions operate: They act just as they normally would act on numbers. When a node value is a JSONString, and it makes sense to do so, there is a default behavior of wrapping values in GetAsNumber before applying a numeric operation.

And then the Date concept just extends this to create a faux-data-type of “DateString”. When the data type is one of the “DateString” values, then the value gets parsed into a Date by using the supplied format string that we’ve been mentioning above, and then from that date we return the DAY, YEAR, MONTH or entire DATE (this is based on the prefix). And then *that* resultant value is what is passed into the operator, be it a comparison operator, or an aggregate operator.

And so, date-interpretation will hopefully feel very similar to what has already been happening with the rest of what JSONQuery does.

KF: I’m shaking my head in disbelief at the amount of thought and effort you’ve put into this.

SSH: You know, that’s really the fun of building a custom function like this.

KF: As I mentioned to you the other day, I’m running out of superlatives.

SSH: Thanks, Kevin. You can have a lot of features and so forth, but figuring out how to package it without having to introduce a lot of new concepts is the challenge. In the case of JSONQuery, I tried (with only marginal success) to limit the number of concepts, and of those concepts, make them similar to familiar ones. But then there’s this mix-and-matching of features which happens which means the complexity can get very elaborate.

(Which, I think, is both good and bad.)

KF: And nowhere would that be more true than in the output transformations, i.e., examples 130-158.

SSH: Agreed. Do you think it’s a good time to talk about those?

KF: Sí señor.

SSH: Okay, so after a while of working with JSONQuery, I began to feel limited in my options for what I could return from the matched objects. It was great to be able to return a specific value pulled from each matched object, and since that included sub-objects, that did feel pretty useful. But I kept wondering about how it might potentially confound someone who wanted to return something which does not fit the pattern of being a single value at a single path. I imagined that devs would want to be able to “hand-craft” their own output, choosing specific values that they wanted to return. And moreover, that such return values could come from just about any possible path.

KF: So a more accurate name for this CF might be JSONQueryAndOptionallyTransform (just kidding but you get the idea).

SSH: Hah! Yes. That would be a good name. Or, at least, a descriptive one. And so, “Transform” became the official name for this capacity for creating a custom return object based on a matched object. Originally I called it “Harvest”, because it felt like one was harvesting values from the matched objects.

The Transform (a.k.a. Harvest) structure starts out as simple as I could:

- Supply some JSON that has a MAP array.

- The items in the MAP specify from where we draw the value, i.e., the path to the value within the matched object.

- And also each Map Item specifies the path to which that value should be written (in the output).

- Include as many of these “Map Items” as you need to build up your custom output.

KF: And as you mentioned above this (conveniently) takes the place of the ResultPath argument.

SSH: Yes, it does take the place of the ResultPath.

KF: Sweet.

SSH: Sweet — but with one gotcha: If you inadvertently supply invalid JSON for your Transform: JSONQuery will have no idea that what you are supplying is a transform.

KF: You drew the line at fixing malformed JSON. Fair enough.

SSH: I think it’s fair enough, too. But it’s a serious gotcha. Reason being, most of us, when we use a Transform are going to be so wrapped up in what we are doing, if JSONQuery returns empty output due to invalid Transform JSON, we could easily overlook the cause.

KF: So best practice is to test your JSONQuery syntax before deploying.

SSH: I put a “gotcha” entry into the sample file to call this out.

KF: Example 165.

SSH: Yes — keep it in the back of your head that if you see [] as output, that it could mean nothing matched, but it could also mean Invalid Transform JSON. But, on the brighter side, once we get beyond the (real) concern of keeping the Transform valid, it becomes a very powerful tool.

And again, the bread and butter of the Transform concept is to just supply an array (MAP) of SOURCE/OUTPUT pairs, that define what to extract, and where to write it. Everything else about Transforms are either a few bells and whistles, or just the ability to do things that you could already do with the ResultPath.

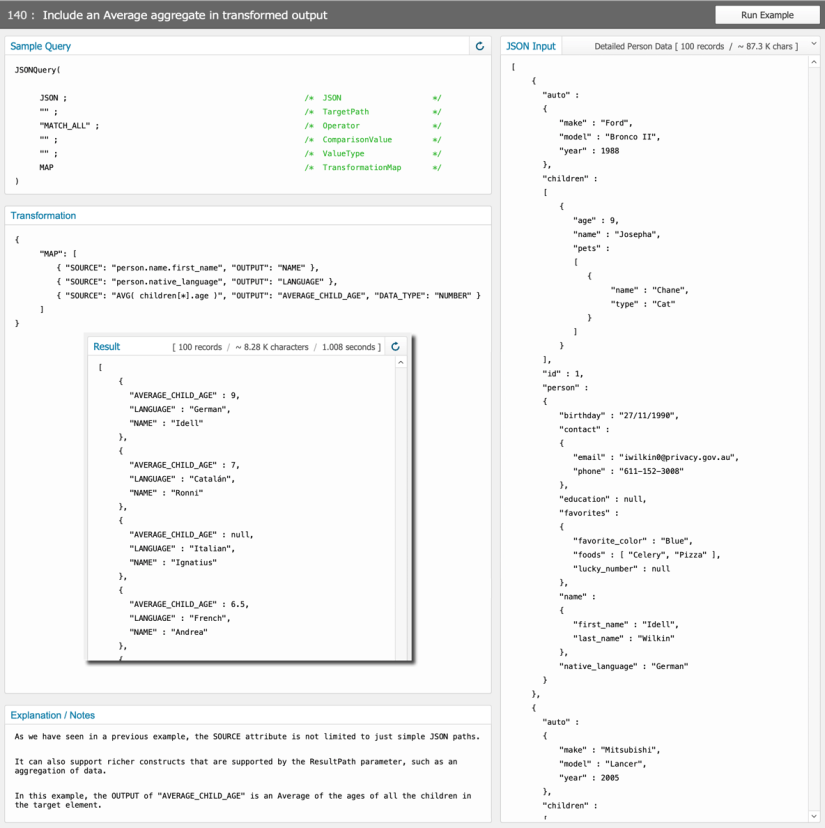

To elaborate on this last point: We already have seen examples of how the ResultPath allows us to leverage an Aggregate operator, to return some kind of aggregation. It so happens that the SOURCE attribute of each Transform Map item supports most (or maybe all) of the constructs that can be applied in a basic ResultPath argument. This means that the SOURCE path of an individual Map Item can also invoke an aggregate.

We can use example 140 to illustrate this.

Example 140 uses a Map which contains a couple of ordinary Map Items, which include a person’s first name, and their native language in the output. But then, it also includes a Map Item which makes use of the AVG aggregate, to include the average age of the children (belonging to the matched person). It’s an example of taking a construct which we’ve already (possibly) seen in the result path:

AVG ( children[*].age )

But example 140 shows us that such a construct is just as at home being used as the SOURCE parameter of a Map Item.

KF: Powerful stuff.

SSH: It is powerful, I think. And it can take a bit of getting used to, but I tried hard to make the SOURCE attribute of a Map Item to have parity with a simple ResultPath. And so, if you have made that investment for learning the ins and outs of the ResultPath, it will hopefully pay some easy dividends in the Transforms.

KF: Agree re: the dividends. Would this be a good time to talk about TEMPLATE?

SSH: Sure. TEMPLATE is what I would call one of the “bells and whistles” of the Transform feature.

To recap on Transform first: Transform allows us to address the case where a dev does not want to return everything from the matched object — they want to pick and choose.

Now, supposing that a dev wanted to keep everything from the matched object, but maybe they wanted to augment that data with some extra properties that should be included in the output. Strictly speaking, they could do this just by using a basic Transform Map, by including a Map Item for every item that exists within the matched object, and then include some additional Map Items for the additional properties.

Kevin Frank: As per example 136.

SSH: But, this would be really tedious. I wanted an easier way for a Dev to add additional properties to an existing matched object (per example 137).

SSH: However, it seemed the best way to do this was to first introduce the concept of an arbitrary template (example 136), and then build on top of that, with the concept of being able to specify that the template should use the matched object as its basis. So, really, it was my desire to offer what example 137 does which drew me to the realization that I should start simpler, with just what example 136 does.

Basic ideas to takeaway:

- A TEMPLATE allows a dev to specify some base JSON that will be used as the basis for each item returned from the Transform.

- It is specified with a TEMPLATE property of the Transform, and now it probably is more evident why the Transform is a JSON object, and not just an array of Map Items — that’s so that we could include the TEMPLATE property as an optional property.

- One thing to know about the TEMPLATE is that it is used freshly as a base for each returned object in the output. By “freshly” I mean that it does not have any concept of “state” which gets updated and then utilized with each processed match object.

- It’s a very simple arrangement: You supply the JSON, and it is used as a template as each object is stamped out.

- And, the act of using the template on one matched object has no effect on any other object’s use of the template.

KF: Okay this is good to know.

SSH: And then, beyond just the TEMPLATE on its own: We have the concept of setting the TEMPLATE to be the actual matched object. It is this ability (example #137) that allows a dev to easily “extend” a matched object with some extra properties.

KF: So just the template on its own is example 136, and then 137 extends the idea.

SSH: Yes, example 136 shows just the template, and 137 shows a special case where the template is set to the matched object.

KF: It’s additive — doesn’t replace anything.

SSH: Yes. That is true. And, since the TEMPLATE is considered a base, that means that items in the MAP array are able to override a value which has been specified in the TEMPLATE. (There’s not much reason to do this if the template is not set to the matched object — but this override capability could be handy in cases where the template *is* set to the matched object.)

KF: 138 is making my head hurt — should we discuss it?

SSH: Oh yeah. Sorry about that. That would make my head hurt, too. As with everything, there was a use case that motivated this feature.

So, the feature that we’re talking about here, is the ability to nest *another* Transform Map, within an item of the usual Transform Map that we just introduced. It is a pretty advanced example. I will say a little bit about it as background, between last interview and this one, we’ve kind of touched upon all of the material in the JSONQuery sample file with the exception of one topic which can be a lot to wrap one’s brain around.

That topic is the use of wildcard arrays: [*]

Wildcard arrays did not exist in the first version of JSONQuery. They were actually the first thing that I pursued when starting this updated version.

Generally speaking, wildcard arrays become very useful for matching against, and returning from, child objects (child in this case, being a child, grandchild, etc.) of objects in the JSON input. So, without getting into details, wildcard arrays make it possible to perform matches based on children, or to return groups of child objects that matched criteria, etc.

To get back to the question of what on earth is going on in example 138…

KF: I see “SOURCE”: “children[*]” in the MAP

SSH: Yes! Perfect. Example 138 is an example of including an array of children in the output, via a wildcard array. This is the kind of scenario where a “nested” Map can be of assistance.

To fire up a FMP analogy: There are a few similarities between creating this sort of custom JSON output using JSONQuery (using a Transform, and wildcard arrays) and doing reporting in regular FMP. In both cases you have to think of the “context” from which you are doing the reporting, and whether or not you need to reach “down” from that context to grab some child data, or perhaps “upward” from that context to grab some parent (or even grandparent) data.

You know how a beginner with FMP oftentimes has to go through the learning bump of realizing that it can be easier to report from a child context (e.g., Invoice Line Items) rather than, a parent context (Invoices) ? It’s related to the kind of thinking that I use for constructing result paths with JSONQuery.

KF: That’s a very useful analogy.

SSH: With JSONQuery, I start with the same level of understanding of what my context is with respect to where the Map items are being applied. And then I think about what I want to include in the output, and I analyze to recognize whether I am looking to include data from my same level of context, or whether I need to look upward or downward. What I realized after doing this for a while, is that there are some cases where I wanted the capability to apply a Map to some child objects that I was obtaining by reaching downwards. And this gave impetus to the inclusion of being able to include a nested Map. The nested Map can be applied to child data, and that is really the only case where it would be useful.

One might question whether or not a nested Map is necessary, wondering if it would not always be possible to just utilize a Map defined at the current context.

Here’s an analogy for this: Suppose in FMP you have a DB that tracks Meetings. Each meeting is going to have 1-n related Person records. We’ll bypass whether this would be a JOIN, and just pretend that we’ve got a one to many relationship…

MEETING ---< PERSON

PERSON contains individual fields for FirstName and LastName, and from MEETING, we could show a list of either of these with: List( PERSON::FirstName ) or List( PERSON::LastName )

But we have to do something that goes beyond a basic List if we want to show a list of…

FirstName & " " & LastName

In other words, List won’t get us that on its own. One obvious old-school option would be to define an additional concatenated field in PERSON, and then we List that field.

KF: Or use ExecuteSQL.

SSH: Indeed — SQL, or a While.

KF: Or a custom function.

SSH: The analogy here is that the nested Transform Map allows us to do some kind of JSON formatting at the child level before we bring that data up to be included in the regular Transform output.

KF: Bingo. Great analogy.

SSH: Okay, I’m glad it worked. To try to summarize: Both the addition of that extra field in the PERSON table example, and the use of the nested Transform allow us to do some pre-processing at the child level before that data gets incorporated at the parent level. Back to just the JSONQuery side of it: It’s definitely an “advanced” topic. It’s one of those things that, until you need it, and you see it work, it’s not easy to appreciate that there might even be a real-world use case that calls for it.

KF: But it seems to me that many things in your demo are straight-forward, so it’s good we’re diving into a concept that may be opaque to many (it certainly was opaque to me before you explained it).

SSH: I think one of the goals with the features of JSONQuery, and the demo file was kind of like the old slogan that I think was used for Perl — something about making easy things easy, and difficult things possible.

KF: That reminds me of these lines from this song:

SSH: My hope is that those who need just the easy stuff will not be daunted by example 138, and instead will just focus on the one or two things that they need, but then those who care to venture further can find some riches, too.

KF: Speaking of further riches, let’s discuss ex. 178.

KF: Given this JSON the goal is to be able to return elements from one column position that match a certain value in another column position.

KF: For example, I’d like to return entries from column [0] where column [1] = 0.

KF: And it works perfectly.

SSH: The only caveat is that there is no optimization that this can offer for performance.

KF: Duly noted. I have immediate need for this particular functionality, and of course I could “walk” the JSON the old fashioned way. But why do that when I have JSONQuery in my tool kit?

SSH: I think that’s what it’s offering you here — convenience. There are a small number of examples after 178 which illustrate how other features of JSONQuery can be leveraged here, too. In particular, I am thinking of aggregation. So that you could SUM or AVG some column of your grid, where some other value meets some kind of match criteria.

KF: Okay, I just checked out 182 and 183… which start with the same 2-D JSON array we saw a moment ago.

KF: Example 182 sums the column [1] values that correspond to Boolean true in column [3].

KF: And 183 averages them.

KF: These are super useful! I would expect that queries like the above will be one of the most common uses of JQ.

SSH: It is great to exchange these thoughts, because the whole spreadsheet concept or grid or array-of-array is not at the front of my awareness. But I know that others (besides you) use it — so it’s good for me to become more aware of this.

KF: I’m sure I’ll be using this particular feature in the coming weeks. So where should we go next? How about output transformations?

SSH: Sure. We’ve seen some of the basics of how one can “pick and pull” the data that they want to include in the output. And, as a recap, this is done by providing a SOURCE attribute, which tells JSONQuery where to pull the value, and an OUTPUT attribute, which tells JSONQuery where to write that value, within the context of the output. This is all pretty straightforward.

But as we work through more advanced examples, what we start to see is that the SOURCE attribute has a handful of “power-user” ways in which it can be used. There’s also a general concept I want to put out there. The concept is that, the SOURCE path that is used for transformations supports any expression that one might have used in a basic ResultPath (without any transformation).

And so, if one has studied some examples and learned a few tricks about how the ResultPath can be used, all of that knowledge can be leveraged with the SOURCE attribute in a Transformation Map Item, as well. So, again staying general for a moment: In the result path, one can use a wildcard array [*], or use an aggregate expression such as AVG, SUM, etc. And those can be used equally well as an expression for a SOURCE attribute.

KF: To be clear, by “ResultPath” we’re talking about the sixth argument.

SSH: Yes — thanks for that clarification. ResultPath is the last (sixth) argument of the function.

KF: And instead of “ResultPath”, the sixth argument can instead be “TransformationMap” as in some of the later examples.

SSH: Also, exactly true. I won’t belabor the point about how the SOURCE attribute can support the same expressions that could have appeared in a ResultPath, but I think it is worth mentioning at least once, and then, hopefully the similarity between the two will start to feel intuitive to devs after a while.

KF: Makes sense. Does the SOURCE attribute make its first appearance in ex. 130?

SSH: Yes. And 130 is a good example of what I would call the “basic” use of how the SOURCE attribute works. In 130, it’s just saying, “Hey, there’s a value over at this path that I want you to include in the final output”.

If we itemize some of the more advanced use of the SOURCE attribute that go beyond the basic example illustrated by example 130, then I would say these advanced techniques are:

- Aggregation of a value (examples 139-141)

- Looking “upwards” to reference “parent” data

- Looking “downwards” to reference “child” data.

KF: This is the explanation I was hoping for. Thanks.

SSH: Why don’t we take a quick look at example # 140, which will give us a look at how the aggregation can happen.

SSH: Example 140 has a transformation which has three items in the Map array. Two of them are of the basic “bread and butter” of Transformation Maps. They gather values (first name, and native language), and specify where those values should be written to. But then there’s a third item in the Map array, and that one uses an aggregate expression. The expression is:

AVG ( children[*].age )

Again this is the sort of expression that we might have seen in a basic ResultPath. Let’s compare example 140 with example 85.

SSH: If we take a look at the ResultPath of example #85, we see that it is what we are using as a SOURCE attribute in example #140. And basically, it is an expression that says, “Hey, I’ve got this array of children elements, and I’d like you to return me the average of the ages of those child elements.” When we did it in Example #85, that average value was the only thing that was returned.

But, in example 140, now that we are using a Transformation, we can not only include this aggregate value, but we are packaging it up with a first name and a native language value.

KF: This is really powerful… it just takes a little time to wrap your head around.

SSH: Had we wanted to include the MIN or MAX age of those child elements, the adaptation to the expression is exactly what you’d expect — just swap in the other aggregate function. It does take a while to wrap one’s head around, but I tried to build everything with a consistency so that, once you do wrap your head around it, and play with it for a while, there may be an intuitive feeling for things because of that consistency.

KF: Completely agree. The more I use JQ the clearer it becomes.

SSH: I sincerely hope that will be the case for most devs who use it.

KF: I’m looking at example 140, and the result is…

SSH: The result is a distilled version of the input array, where each output item includes just the first name and the native language values, but then it is also hot-rodded with the inclusion of that average child age aggregation. Incidentally, that screenshot illustrates what kind of output we see when we attempt to aggregate an empty set (re: the average age of Ignatius’ children).

KF: That particular “children” array is empty?

SSH: Yes. I just double-checked. His children array is just [].

KF: So JQ intelligently accommodated that.

SSH: There’s not really a lot of choice about it, right? Because if JQ were to return 0, that could be very misleading; one would be left to wonder if the input array was [ -1 , 2 , 1, -2, 0 ] or just [].

KF: JQ returns NULL not zero in this case. Presumably it would return 0 in the example you just posited.

SSH: Exactly — yes. I wanted some way to have the AVG output reflect those two possible cases with distinct output. And so I chose either NULL or “” for the empty array case. But an actual numeric value any time that the array was non-trivial with respect to numeric values to average.

KF: Zooming out to comment on the big picture, speaking of the configuration of the map, it’s great that the TransformationMap is JSON. You could have done it some other way, but it makes perfect sense to do it as JSON.

SSH: Glad you think so — it was easiest for me, that’s for sure.

KF: So what should we look at next?

SSH: I’m thinking it might be a nice idea to touch upon the other two sort of “power” uses of the SOURCE attribute: Look up/down to parents/children. To consider this particular use of SOURCE, I think that we should briefly mention the concept of a “BASE_PATH” of a transformation. The BASE_PATH attribute is introduced in example 142.

SSH: We can think of the BASE_PATH as being somewhat analogous to choosing the FileMaker Context when you are getting ready to do reporting. As we may have mentioned earlier, in an example of generating a report in FileMaker for an Invoices solution, one has to think about whether the goal is best met by doing the reporting from the Invoices context, or the InvoiceLineItems context, or perhaps even an Account context or a Product context. Each different context will allow for different possible report options.

KF: Absolutely. It was a turning point for me as a FileMaker developer when I understood this.

SSH: And, before doing much else, one has to make that decision about which context they are reporting from. In FileMaker, as we all know, we establish context by going to a Layout (because the Layout is based on particular TableOccurrence which sets/is the context).

With Transformation maps in JSON query, it can also make sense to think about the context of where the Map Items are being evaluated. And, if one is at the point where they are making such a decision, and being very deliberate about evaluating the transformation Map items from a certain context, it may be necessary to explicitly state what that context is. In JSONQuery, there’s no such thing as going to a layout to specify the context — instead, what we do, is we supply a BASE_PATH attribute as part of the Transformation, to tell JSONQuery what the target context is.

KF: This is really powerful. And worth thinking about.

SSH: It is. Once we accept that we have this concept of setting a context with a BASE_PATH (ex. 142), we can then talk about the fact that sometimes one needs to draw data from something that is related to our context. Again, a FMP reporting analogy could continue to serve us.

So, there are two basic directions that one can pull data from that are what I consider “power” or “advanced” usage of the SOURCE attribute: One is to look upwards to grab data from a “parent”. This would be analogous to including Invoice details from the context of InvoiceLineItems, i.e., we are looking “upwards” to the parent Invoice to grab some data. The other is to look “downward” into a child element. The analogy here would be to bring in some data from a Product, while in the context of InvoiceLineItems.

And, in both cases, assuming that the data exists, we are not limited to looking only one level of depth away. There is support for looking upwards to grandparents, or downwards to grandchildren, etc. Let’s see if we can find some specific examples, to make this a little bit more concrete.

KF: Good idea.

SSH: I think Example #145 is a nice one to look at for the case of “looking upwards” to parent data.

SSH: So, for starters (in example 19), a BASE_PATH has been set, which establishes the context as a set of all Children elements. Setting the BASE_PATH to the context of the children means that all SOURCE attributes in the Map items will be evaluated from the context of these children objects.

KF: Makes perfect sense.

SSH: And so, when we see

"SOURCE" : "name"

what that means is that we are targeting the “name” attribute of one of the children (an element in the children[] array).

KF: Since you set the “base path” you don’t need to spell it out every time inside the map. And anyone who’s used UNIX or DOS will know that “..” in a path means “go up one level”.

SSH: Exactly correct. And if you did try to spell it out, it would not work.

KF: Duly noted.

SSH: Yes — another example of trying to bring in nomenclature that has an established meaning, rather than inventing something new. So, exactly as you have mentioned, the

../person.name

path is telling JSONQuery, “Hey, go up one level to the parent of the element that you are sitting on, and from there, grab the value that can be found under person.name”.

Which, to go back to the FMP reporting analogy, is like using a relationship between Invoices and InvoiceLineItems, to include some Invoice data, e.g., Invoice Date, in the report, while reporting from the context of InvoiceLineItems.

KF: And as we all know by now, a “value” in JSON can itself be JSON (i.e., an object or an array).

SSH: Ah — interesting. I had forgotten that parent.name points to a value which is actually a JSONObject.

As a variation, we could have used a SOURCE value of something like

../person.name.first_name

if we had wanted to grab just the parent’s first name. Again, once there is some familiarity/comfort/fluency with manipulating paths that point to values in the JSON, we see that we have a lot of options for what we can grab.

But the takeaway here is what you called out: use ../ as a means to go up one level in the structure.

KF: Let me demo what you suggested a moment ago. If we make these changes…

…we will get this as a result.

SSH: One issue, which might be a point to discuss, the data type values are: ARRAY, OBJECT, STRING, NUMBER, NULL (and are documented in example 133). If you leave the data type empty, as per your example above, it will default to STRING.

KF: Thanks for pointing that out.

SSH: Sure. To wrap up the general topic of Output Transformations, we can mention the “Structural Conversions” covered in examples 155-158. The basic idea behind these examples is that you might start with input which is an array of JSON objects, but then apply a transformation that returns a JSON Object structure. Or vice versa, you may start with input which is a JSON Object, but then apply a transformation which returns a JSON array.

In other words, these are converting from one structural type to another.

KF: Okay, I’m looking at 155 now.

SSH: Example 155 is an example of taking input which is a JSON array, and transforming it to output a JSON object structure. To do this, we have to have a value within each element of the supplied array, which can serve as a unique key.

KF: You start w/ an array, but because the email addresses within that array are unique, you can turn things around and generate a JSON object where each top level key is the email address.

SSH: Yes. Perfectly stated!

KF: 155 is a great example, and you make a point in the notes that the “key” value must be unique (for obvious reasons).

SSH: Yes — that uniqueness is required.

Example 156 does the opposite of 155 and transforms an object into an array.

SSH: Essentially, we are starting with a giant JSON object as input, and we are returning a JSON array instead. The values of the output array are the “top-level” elements of the input JSON object.

Example 156 also shows how we can retain the original key used to store each element within the input object. Instead of that key being used as a key within an object, it is sort of “tucked into” each element returned in the array. This is optional — if the original key is not needed, it does not have to be included, but the option is there to include it.

KF: That’s a great option.

SSH: I think that these sorts of array <–> object transformations are a little bit in the “niche” category of features, but I could see needing them from time to time, and, if I were going from object to array, it seemed natural to provide a means to retain the original key. (Just to be a bit more concrete, in example 156 the original key from the object input, is being stored under an attribute named “id” in each output element).

KF: What’s going on in example 157?

SSH: Example 157 illustrates that it is possible to take input which is a JSON object, and replace the top-level keys in that object with alternate keys, drawn from the values stored in the object. Similar to example 155, in order to perform this type of transformation, it is necessary to have a unique (and always populated) attribute that one can pull from each child object to serve as an object key.

KF: Really nice example there. I was thrown for just a second because the elements moved around due to re-alphabetization of keys during the transformation.

SSH: Ah — yes. That would make sense.

KF: It does make sense. And now we come to example 158.

SSH: Example 158 is a little bit more lightweight. Strictly speaking, it does not really feel like a structural transformation, but it bears a certain similarity to the other examples in that category such that it made sense to me to include it there. Example 158 illustrates that it is possible to include a property in each output object which tells the original array index under which the matched object was found. In this example, MATCH_ALL is being used, so the effect is that every element in the output array simply includes an attribute (“array_index” in this example) which contains the index of that object in the original input array.

KF: It could come in handy.

SSH: Maybe. This is one that I am not sure anyone will need, but it was not difficult to add in in its basic form.

It might help to further illustrate: If we were to change the query from MATCH_ALL to specifically matching some attribute value, then we’d have another example which might help clarify. So, lets say we changed the example to look like this:

This would match just one array item from the input (the last entry). And the result would look like this:

We can see the “array_index” value is 249 (corresponding to the last entry), indicating that these array index numbers are not relative to the output — they are relative to the original input. (If someone were to just casually look at the original example 158, this could be overlooked and/or misunderstood.)

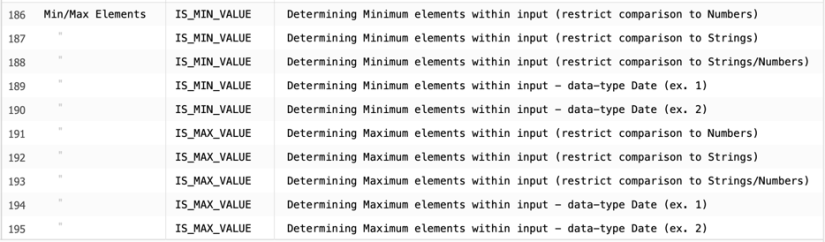

KF: There’s one more set of new additions we haven’t discussed yet: the Min/Max elements in the “Extras” section.

Update 2 Nov 2024: Due to the 30K-character custom function length limitation, these two operators have been removed from JSONQuery v2, but good news: the functionality is still there — it just requires two calls to JQ rather than one. These examples are still present in the v2 example file, with work around code for each provided in the Explanation/Notes section. See comments section for one example of work around code.

SSH: Ah. Thanks for mentioning those. Examples 186-195 showcase two new operators that I selfishly made for myself, because I sensed that I might need them in the coming months for a project that is on the horizon.

KF: I have a feeling this “selfishness” as you call it will benefit others as well.

SSH: I hope so. These new functions could be called “convenience” functions, because, strictly speaking, one could use JSONQuery to accomplish what they do without them existing, but it’s just that doing so would take a couple of calls to JSONQuery, and I wanted something which could allow me to get the job done in a single function call.

How about if we take a look at example 186?

SSH: We’ve seen before that JSONQuery can look at an input array, and return the Maximum or Minimum value that exists at a particular path within each array element.

I was very proud of this feature, but when I started looking ahead at a project in mind, I realized that I might come across a case where I don’t just want to know what the maximum (or minimum) value is, but I might also want to know more information about the element which contains the min/max value.

I basically wanted some kind of “Hey – figure out what the min/max value is, and then return me the element that has that min/max value” type of function.

And so, formalized, this became IS_MIN_VALUE and IS_MAX_VALUE.

So, looking at the example, we see that we are using IS_MIN_VALUE to return those “auto” elements which happen to have the earliest (minimum) year attribute value. In other words, “Return me data from the records with the oldest cars.” Since there could be more than one such element, the data is still returned as an array.

Another detail to call out with this example is that the ValueType parameter has been set to JSONNumber.

Similar to other behavior of JSONQuery, there is attention to data-type, and so specifying JSONNumber means that only nodes of type JSONNumber will be considered, both when determining the minimum value, as well as when finding matches to that minimum value.

KF: This is really powerful. Many times I’ve wished for a capability like this for native FM searching. It’s not that we can’t do it… it’s that it requires multiple steps, but here you’ve short circuited the process so it’s a single function call.

SSH: Thanks. The subsequent example, #187 is similar, but instead of a number, the constraint is that only nodes of type JSONString will be considered. And this is achieved by specifying a ValueType parameter of JSONString.

SSH: Finally, example 188 allows for the data-type of the target value to be either a JSONString or a JSONNumber. This is accomplished by setting the ValueType parameter to an empty string.

As with some other aspects of JSONQuery, there’s this nuanced ability to weed out or include data based on data-type. For the case of IS_MAX_VALUE and IS_MIN_VALUE, I am expecting the most common use case will be just to leave the ValueType at “”, and allow for both JSONNumber and JSONString values. But, if someone does have a more nuanced need, it should be supported.

KF: Really nice.

SSH: So — now that we have this concept established of “finding the minimum or maximum” value in a set, and returning the associated element, it was natural to extend this concept a bit. And by that, I mean that we could “cross-pollinate” this idea of IS_MIN_VALUE with the previously seen ability to have JSONQuery interpret date-strings.

And the result of that is shown in examples 189 and 190. We are still hanging onto the same concept of “Hey, find and return me the one with the minimum/maximum value”, but we are including a date format string which tells JSONQuery that the values in question are dates which have been stored as strings.

KF: In 189 you find the earliest date for birthday (returning one result).

KF: In 190 you find the earliest year for birthday (returning three results).

KF: And, in both cases, since the result path is empty you return the entire object(s).

SSH: Exactly. I’ll mention that the usage of the date format strings is similar or identical to what we have already seen for matching dates. If one has learned the ins and outs of matching dates, these examples should feel fairly easy to understand.

KF: So in 186-190 we have MIN values. And examples 191-195 show the same functionality but for MAX.

SSH: Exactly. Thank you for mentioning that. As a companion to the IS_MIN_VALUE operator, there is an IS_MAX_VALUE operator, which is the same concept, but instead of returning elements corresponding to minimum values, it is naturally returning elements corresponding to maximum values.

KF: Wow. We have covered a huge amount of ground. Would this be a good time for a high-level recap?

SSH: Can do me the favor of reminding me of the alternate name for JSONQuery that you had? That name is a very good way to begin to recap all that it does.

KF: JSONQueryAndOptionallyTransform.

SSH: If we went over the top, we might say something like: JSONQueryAggregateAndTransform

KF: Yes.

SSH: And those are the basic things it does:

1) Query: Allows for finding elements that match a supplied value, with a variety of options for operators.

2) Aggregate: Allows for returning an aggregate, e.g., SUM, AVG, LIST, etc., of values taken from either the matched elements, or all of the input.

3) Transform: Allows for customization of how the results are returned, with the option to pick-and-choose what attributes are included in the output, and how they are named.

Basically, those three things are what JSONQuery does. Sometimes it is fast. Sometimes it is not. When it isn’t fast, expect similar performance to what you would get if you were to write your own While() loop to process the input.

And lastly, the nomenclature for the various features, operators, etc., does its best to pull from familiar nomenclature. Sometimes this is from FMP, sometimes from SQL, sometimes from other sources, but there is an attempt to offer a sense of familiarity.

And I guess (really) lastly: Many of the features can be combined with one another. This ability to combine features, e.g., aggregation with a transform, or matching with aggregation, etc., bring a large variety of possibilities to the table.

KF: That is a world-class recap.

SSH: Thanks, Kevin. I think it will probably do! Do we have a little more time to mention where the source is and why it is minified in the function?

Minification

KF: Absolutely. Minification: what is it and why was it necessary?

SSH: If one takes a peek into the code in the custom function, it’s pretty notable that, beyond the header at the top of the function, there has been a transformation applied to the code that compacts it quite a bit.

This “minification” of the custom function code was done to save space — I was well over the 30K character limit with JSONQuery.

KF: That’s a great reason to minify.

SSH: And also, given that I like to use long and descriptive variable names when I write functions, there was no chance that I could just “trim a little here and there” to get it down to size.

The true un-minified source to JSONQuery is over 124K characters, so it became important to apply a routine that was very aggressive in minifying the code. Comments and unnecessary whitespace are removed. And all variables are subject to renaming, so as to reduce the length of the variable names.

KF: Speaking of the unminified source…

SSH: Good point to mention that. The unminified source is included in the JSONQuery FMP file. To get to all recent versions of the source code (including some previous un-released iterations), one only has to go into layout mode, and open up a window to the last layout in the list. That will take you to a table where all of the source code is stored.

KF: If people want to trace the evolution of this CF, they can navigate to the “JSONQuery Source Code” layout, and explore each version in un-minified splendor.

SSH: I wanted to make sure that everyone had the (unminified) source available to them, so that any curiosity or concern about what is taking place under the hood could be satisfied.

KF: Good thing FileMaker text fields can accommodate considerably more data than the 30K calculation character limit.

SSH: Yes — very fortunate to be able to store the code in a single field without having to go to any trouble.

Closing Remarks

KF: Steve, it’s been a privilege and a pleasure having these conversations with you, and learning about JSONQuery. Your custom function and accompanying demo file are tremendous gifts to the FileMaker community. Thank you.

SSH: Well, Kevin, if I haven’t said it yet (or even if I have), you can certainly take a lot of credit for this function both evolving and being shared. It has been through the numerous exchanges that you and I have had that it has been shaped, refined, and brought into its current form. And I am sincerely grateful to you for that.

Had someone asked me a year ago if I thought that JSONQuery would reach its current state, I probably would have said “no”, but here we are, and I am very glad. Thank you, too.

Steve Senft-Herrera has been developing using FileMaker Pro since 1994. He started working at Beezwax Datatools in 2015, and he credits the collaborative and immensely talented team at Beezwax as being an inspiration to learn and grow every day. Outside of the world of FileMaker, he enjoys cycling, music, being around animals, and being silly.

An “easter egg” from SSH — if you change example 1 as per this screen shot, you will get back the array index positions of all matching items.

(And then you can reset the example by clicking the button at the top right.)

Here’s a (somewhat contrived) example of using the above to update selective nodes… in this case to document that recall notices were issued to customers with cars having model years 1999 or 2000.

A similar trick for objects — change example 30 as per the screen shot, and your query will return corresponding object keys.

I’m wondering JSONQuery do this kind of transformation?

Source =

[{"absType":"Injured","idStudent":"R001292","name":"Charlie","regDate":"04/01/2022"},

{"absType":"Injured","idStudent":"R001343","name":"Zac","regDate":"05/01/2022"},

{"absType":"Injured","idStudent":"R001517","name":"Rosie","regDate":"05/01/2022"},

{"absType":"Injured","idStudent":"R001517","name":"Rosie","regDate":"06/01/2022”},

{"absType":"Injured","idStudent":"R001292","name":"Charlie","regDate":"07/01/2022"}

{"absType":"Injured","idStudent":"R001517","name”:”Rosie,”regDate":"07/01/2022"}

]

And output =

[["04/01/2022”,"Charlie"],

["05/01/2022”,null,”Zac”,”Rosie”],

[“06/01/2022”,null,null,”Rosie”],

[“06/01/2022”,Charlie,null,”Rosie”]

]

Or even better

[["04/01/2022”,"True"],

["05/01/2022”,null,”True”,”True”],

[“06/01/2022”,null,null,”True”],

[“06/01/2022”,True,null,”True”]

]

To which I would add a new zero “row” of

["Date","Charlie","Zac","Rosie"]Remove the square brackets and save as CSV.

Hi Paul,

Thanks for the good puzzle. The short answer is that JSONQuery does not have the sort of “group by” feature that would be needed to perform this sort of transformation on its own. Definitely a “group-by” feature would be cool to have, and I did consider something like it at one point, but I could see that it would quickly exceed what I could pack into the code.

I think that the best that JSONQuery could do in this case would be to provide some assistance in simplifying a script or calculation that would produce the final output.

For instance:

– JSONQuery could be used to harvest a list of dates from the input.

– That list could be used in a loop which builds up the output.

– Each iteration of that loop might use JSONQuery to get an array of students which are associated with the target date for that iteration.

– The script/calculation would then have to manually assemble the format that you are looking for.

So:

It would still leave a fair share of the work to your script or calc, but it could help simplify a couple of the steps along the way.

Hope this helps, and glad to see the interest!

-Steve

Steve, Thanks for the detailed reply. I didn’t think I could use JSONQuery alone, but I thought I’d check as the CF can do so much.

My plan (which I have used before albeit before JSONQuery) is to get a list of students and use their position in the list as their array index.

The loop over the original array to create one array for each date with true at the index determined position of the student name in the first list.

It’s great to see how you have continued to pursue the enhancement of JSON functionality since our conversation a few years ago regarding the fasted way to convert large numbers of records to JSON.

Thanks for sharing JSONQuery, did you release a new version after this article?

Hi Paul,

Thank you for the reply and positive words. The methodology that you describe for getting the desired output is brilliant, and a good example of why I enjoy these types of interactions — I don’t think that it would have occurred to me, at least not immediately, and yet it is plain to see as a great option, once pointed out.

As for an update to JSONQuery:

Though it sometimes tries my patience to sit in a holding pattern, the truth is that I have had an update almost ready for release for quite some time now, but not yet released. The hold-up is that I want to write a good number of additional test cases before making it public, and I simply have not made the time to sit down and write them. It will be a relief when I finally get it out there, as I have been waiting so long, but also because I think it will be a good place for the code to sit for a while and and establish whatever place it will in the dev toolboxes of those to whom it speaks.

Hope this finds you well, and really appreciate your input.

Thank you.

Thank you too for your kind words. I understand wanting to add the necessary examples. Where should we keep an eye out for notification that the new version is released?

For news of a new release, I would definitely keep an eye on FileMakerHacks.com. Kevin and I have talked about possibly doing another interview when the JSONQuery update is ready to go public, and while I don’t want to speak for Kevin, I believe the he and I are still both thinking that doing an interview would be a good idea, and also fun to do.

Many thanks to you, Paul.

Absolutely. Ready when you are, and looking forward to it.

I’ve been utilizing this for a couple of years, and it’s been fantastic! Encountered a single problem recently (though it’s worth noting that the data is unusual).. When running the function (using any parameters) on this json:

[{

"Clinic_Department": "",

"Clinic_ID": "CL12345",

"Clinic_Name": "Test"

},

{

"Clinic_Department": "",

"Clinic_ID": "CL19999",

"Clinic_Name": ",Whatever"

}

]

It returns:

?ERROR: Malformed Json parameter supplied.

If I remove the comma before “Whatever” it works.

Hi BenG,

Thank you for posting your comment, as indeed, it is a bug, and I am glad that you found it and brought it to my attention.

It so happens that this bug is resolved in the next version of JSONQuery which has been waiting for about 18+ months for me to release to the general public. I will do my best to get that update to JSONQuery released before the end of this year. I have a lot of test cases that I would like to confirm that the update passes before I release it. The hold up is not the writing of the code, but rather confirming that my test cases are all accurate — there are over a hundred of them, and sometimes verifying the correct result (without the use of JSONQuery) is a time-consuming task. But, as I said, I will do my best to try to complete this work before the end of the year, so as to have an improved version of JSONQuery released, which also fixes this bug.

Thank you again. I welcome any further thoughts/comments about this, or any other aspect of JSONQuery.

All the best,

-Steve

Hey Steve, much appreciation for creating this incredible tool that has become indispensable. Eagerly anticipating the next version!

Yes, yes, and yes.

Thank you so very much, BenG. Your comments (both the note on the bug, and the note of encouragement) are very much appreciated.

Regarding the name concatenation scenario mentioned above, it seems like that could be similar to Example #145, but I was wondering how you would combine the values from two separate keys. In Example #145, the parent’s name is a JSONObject, with “first_name” and “last_name”. If you wanted the result to show the parent’s FULL name as a string value, rather than just the first/last or an object, I’m not sure how to do it. It feels like the LIST command might help, although that is for merging array items together, not separate object keys’ values. I also looked at Example #156, “Restructure a JSON object into a JSON array”, but I’m getting tangled trying to figure out how many nested TRANSFORM operations I would need to do to turn the following into [“Idell”, “Wilkin”]:

{"first_name" : "Idell",

"last_name" : "Wilkin"

}

Am I missing something? It seems like the tricky part is how to have the SOURCE be more than one JSON path, or perhaps on how to have the OUTPUT be an array index. I didn’t see examples for either of those, but perhaps I just didn’t notice it?

Hi Dan,

Thank you so much for leaving a comment. Having read and appreciated many of your posts on forums, it is nice to see that you have taken a look at this CF.

Your observations all seem spot-on to me. I’ll try to address them:

1) Correct that there is not a way to do a concatenation of values coming from different nodes of a matched JSON object.

So, for example, if a matched object were:

{ "first_name": "Joe", "last_name": "Smith" }there is no JQ/Map feature that can output something like:

{ "full_name": "Joe Smith" }It would be totally cool to be able to do that, and it was a feature that I did toy around with a few years ago. I nixed the idea because it felt like it would be an attempt to cover more ground than I could do well. Essentially, I was playing with some templating constructs that could be used in the MAP feature. The ideas felt really cool, but it also felt like I would have opened up a Pandora’s Box of issues that I might not be able to support. I also figured that a “templating” JSON –> String function/script/js-solution is something that someone else would likely develop, and hopefully with a strong implementation. As such, I felt ok about not attempting that feature.

Likely it was just some analogy that I used in the discussion with Kevin that made it seem like I was saying that JQ could do such a concatenation. It’s not what I meant to imply, however, and I apologize for any consequential confusion.

2) It is possible to have a matched object such as:

{ "first_name": "Joe", "last_name": "Smith" }be mapped to an array structure such as:

[ "Joe", "Smith" ]In other words, instead of being tied to having output such as:

[{ "first_name": "Fred", "last_name": "Flintstone" },

{ "first_name": "Wilma", "last_name": "Flintstone" },

{ "first_name": "Dino", "last_name": "Flintstone" }

]

A map could be used to return output such as:

[[ "Fred", "Flintstone" ],

[ "Wilma", "Flintstone" ],

[ "Dino", "Flintstone" ]

]

I’m not sure if that is much help to you, but in case it is, I’ll share some examples to illustrate. Not every potential restructuring to an array will be possible, but basic possibilities will work. The “secondary map” feature (Example 138) does open some additional doors, but I have decided to pull that one feature (the “secondary map”) from the function.

3) As just mentioned above, the “Secondary Map” (Example 138) feature has been pulled from the next version of the JQ function. Removing a feature that was already part of the function as publicly released is not something that I took lightly, but I think that the trade-offs were worth it. My motivations for removing it were that, in hindsight, the secondary map feature is something that is extra-extra-challenging to understand and learn to use, and it does not perform great. My hope and suspicion is that no one was making use of it. In exchange for removing that feature, I was able to add some quality of life improvements to JQ which I feel are more accessible and helpful to devs.

Thank you again for posting your comment/question, Dan. Please do not hesitate to reach out if anything still requires some clarification.

Some examples of outputting array structures should be below.

-Steve

These examples can be used against the basic sample data used in the first examples of the JQ demo file. Again, not all potential restructuring will be possible, but hopefully these examples will shed some light on what can be done, and how…

Example 1) Specifying an array template value to tip off JQ to use an array structure:

JSONQuery(JSON ;

"" ;

"MATCH_ALL" ;

"" ;

"" ;

"{

\"TEMPLATE\": [],

\"MAP\": [

{ \"SOURCE\": \"person.name.first_name\", \"OUTPUT\": 0 },

{ \"SOURCE\": \"person.name.last_name\", \"OUTPUT\": 1 }

]

}"

)

Example 2) Similar to above example, but operating on a child array:

JSONQuery(JSON ;

"auto.make" ;

"EQUALS" ;

"Ford" ;

JSONString ;

"{

\"TEMPLATE\": [],

\"BASE_PATH\": \"children[*].pets[*]\",

\"MAP\": [

{ \"SOURCE\": \"name\", \"OUTPUT\": 0 },

{ \"SOURCE\": \"type\", \"OUTPUT\": 1 }

]

}"

)

Example 3) Inserting an array structure into an object element. This one is analogous to doing an Invoice report from the context of Invoice_Line_Items. The limitation is that, once you have chosen that particular child context, you are locked in, and consequently, possibilities for simultaneously doing other restructuring is limited or impossible.

JSONQuery(JSON ;

"auto.make" ;

"EQUALS" ;

"Ford" ;

JSONString ;

"{

\"BASE_PATH\": \"children[*].pets[*]\",

\"MAP\": [

{ \"SOURCE\": \"../name\", \"OUTPUT\": \"child\" },

{ \"SOURCE\": \"name\", \"OUTPUT\": \"pet[0]\" },

{ \"SOURCE\": \"type\", \"OUTPUT\": \"pet[1]\" }

]

}"

)

One more example which I did not consider in my earlier reply.

It uses two passes of JQ to yield the output with a concatenated full name.

As you very aptly noted, the LIST function is used to join array values, but first one has to reshape things into the array structure. I did not see this possibility earlier today, but I think that you already were envisioning that it might be possible. Kudos to you for seeing that…

Let([~map1 = "{

\"MAP\": [

{ \"SOURCE\": \"person.name.first_name\", \"OUTPUT\": \"full_name[0]\" },

{ \"SOURCE\": \"person.name.last_name\", \"OUTPUT\": \"full_name[1]\" }

]

}" ;

~map2 = "{ \"MAP\": [ { \"SOURCE\": \"LIST( full_name[*] ; 32 )\", \"OUTPUT\": \"full_name\" }]}"

];

JSONQuery(

JSONQuery( JSON ; "" ; "MATCH_ALL" ; "" ; "" ; ~map1 );

"" ; "MATCH_ALL" ; "" ; "" ; ~map2

)

)

Thank you for the kind words, and for the feedback here. I feel like I’ve been missing out by not digging into JSONQuery years ago. Your very thoughtful and helpful responses pointed me in the right direction. As I was reading them, I began to wonder if nested JSONQuery calls might be the answer. Sure enough – that was what did it.

And, I can imagine how complicated it would be to add key-merging as a top-level transform in the function. It seems unnecessary when the same thing can be done with a subsequent call to JSONQuery. There’s a point where simplicity of calling the function has to give.

So, using Example #145, if you want to get the children’s names, along with each child’s parent’s full name, I was able to put together your advice into this:

/* Note that if you are using the Example FMP12 file, the code below should be pasted into the ‘Sample Query’ field, not split across the Query and Transformation fields. */

Let([~map1 = "{ \"BASE_PATH\" : \"children[*]\",

\"MAP\": [

{ \"SOURCE\": \"name\", \"OUTPUT\": \"NAME\" },

{ \"SOURCE\": \"../person.name.first_name\", \"OUTPUT\": \"PARENT[0]\" },

{ \"SOURCE\": \"../person.name.last_name\", \"OUTPUT\": \"PARENT[1]\" }

]

}" ;

~map2 = "{ \"TEMPLATE\": \".SELF.\", \"MAP\": [ { \"SOURCE\": \"LIST( PARENT[*] ; 32 )\", \"OUTPUT\": \"PARENT\" }]}"

];

JSONQuery(

JSONQuery( JSON ; "" ; "MATCH_ALL" ; "" ; "" ; ~map1 );

"" ; "MATCH_ALL" ; "" ; "" ; ~map2

)

)

I stuck with trailing semi-colons out of respect for the author. :-)

Hello Dan,

Seeing your reply and its accompanying example truly made my day. Your example so perfectly nails numerous concepts that go beyond the obvious stuff — I was delighted to see it.

For the benefit of anyone else reading the comments on this page, the example in Dan’s post illustrates:

1) The use of wildcard arrays to group child elements as a single collection

2) The use of a Map to hand-pick data and rename keys

3) The use of the ../ path directive to look upwards from a child to a parent element

4) The use of dual passes of the CF, for more sophisticated processing

5) The fact that Map SOURCE elements can use aggregate functions, e.g., LIST

6) Using an aggregate with a special optional param (in this case, a custom delimiter for LIST)

7) A smart technique that I had never seen or thought of before, which is to use the LIST aggregate as a means to concatenate data from distinct nodes.

That’s an amazingly satisfying list of things for me to see. It made me very happy, and, in fact inspired. Thank you for posting it, Dan, as well as for this exchange.

It’s difficult for me to put an ETA for the next release of JSONQuery — it is waiting for me to do some QA on it. But I would like to mention that one of the quality-of-life improvements that it offers is a sort of “shorthand” notation for the Map JSON, which allows devs to use “faux-JSON”, where the need for double-quote chars is reduced, if not eliminated entirely. Removing the need for all of the backslash-escaping makes reading and writing these types of calculations much less cumbersome, and more enjoyable.

Wishing you well. Thanks again, Dan, for sharing your thoughts and insights as you did. I am grateful to you.

Hi Dan, just a quick note (perhaps for the benefit of other readers, as I’m guessing you are already aware of this) to say that now that JQ v2 is out, the above example can be re-written to take advantage of the new streamlined “pseudo-JSON” MAP syntax.

Let([~map1 = "{ BASE_PATH: 'children[*]',

MAP: [

{ SOURCE: 'name', OUTPUT: 'NAME' },

{ SOURCE: '../person.name.first_name', OUTPUT: 'PARENT[0]' },

{ SOURCE: '../person.name.last_name', OUTPUT: 'PARENT[1]' }

]

}" ;

~map2 = "{ TEMPLATE: '.SELF.',

MAP: [ { SOURCE: 'LIST( PARENT[*] ; 32 )', OUTPUT: 'PARENT' }]}"

];

JSONQuery(

JSONQuery( JSON ; "" ; "MATCH_ALL" ; "" ; "" ; ~map1 );

"" ; "MATCH_ALL" ; "" ; "" ; ~map2

)

)

P.S. To belatedly echo what Steve said… that is an impressive and inspired use of JSONQuery’s MAP capabilities.

I had skimmed this a while back and recently had use case for it. Long story short(-ish), a client needs to get some data from a web site that doesn’t have an API. We are scraping the page, which we know is fragile. The site recently had a redesign which of course broke things. The new code has a bit of JSON in it… great! The bits of info we need are in an array within that JSON with a few hundred items. We match the “name” key and return the value of the “value” key. JSONQuery was a perfect solution.

But we sometimes get the “?ERROR: Malformed Json parameter supplied.” result. FileMaker thinks the JSON is fine. I’m not sure if it is the same issue that BenG mentioned above. There are a couple entries that have some gnarly entries with some Base64 data, so there are commas in there, if that is the cause.

Found another way around it, but reach out to me if you want another example to test with your latest version.

Thanks!

-Shawn

Hi Shawn,

Thank you for bringing this up. I would really appreciate another example where the “malformed JSON parameter” error erroneously is returned.

Both so that I can understand, and also test whether or not the issue exists with the next build of JSONQuery that is waiting to be released.

I will DM you via a forum to see it is possible to obtain an example from you.

Thank you, Shawn.

-Steve

Hello,

Just an update to clarify what Shawn helped to uncover:

– What Shawn mentioned above is another instance of the same bug that BENG also reported.

– The Bug is that JSONQuery will incorrectly reject input as malformed if there is a string node/value in the JSON which leads with a comma character.

An example would be:

{ "a": ",hello" }– This bug is fixed in the next/upcoming version of JSONQuery, so the responsibility is on me to finally finish the QA work on that version so that it can be released.

– I completely acknowledge that it has been taking me a very long time to do that QA. I will do the best that I can to give some attention to this task soon after mid April.

Many thanks to both Shawn Krueger and BENG for helping me out by sharing their examples of having encountered this bug!

Hey Steve, any chance we’re close to an updated JSONQuery? It’s such a powerful tool

Hi Jon.

Yes — I am happy to say that we are getting close to the update being released. Kevin and I recently discussed this, and we are both hopeful for a release sometime in July.

Though I still have a small number of test cases to complete, most of the QA work is done, and I am presently putting some time into updating the demo file with new examples, and other small changes which I hope will make it easy to learn about the changes in the new version.