Last October I began a series on Outer Joins, which explored a number of different ways to display summarized information in a grid, and at the time I concluded that the “fastest” method was to leverage the FileMaker relational model. And it was plenty fast, locally… and not too bad on a LAN… and technically, it was the fastest method on a WAN but only because the other methods we looked at were even dog-slower than it was.

I like to test solutions on a WAN, even if they’re only going to be deployed on a LAN, because it’s a great way to uncover performance bottlenecks. But recently I needed to deploy a summarized grid on a WAN, and was incentivised to come up with something faster… and after a bit (well, okay, a lot) of trial and error settled on the approach we’re going to look at today. To cut to the chase, with a million records in the test file, the previous best grid rendering time of 11 seconds on a WAN has been reduced by a factor of 10, to just over one second.

So the goal is to quickly render a 7-day grid showing total daily sales per employee, and today we have two demo files, and two versions of each… one with 30,000 sales records, and one with 1 million sales records:

- Grid Display v1

- Grid Display v1 (with 1 million sales records)

- Grid Display v2

- Grid Display v2 (with 1 million sales records)

Grid Display v1 is pretty much the same as Demo 3 in Outer Joins in FileMaker 12, part 1, or more precisely, Demo 3d from the comments at the bottom of the article. It is well documented there, so I’m not going to dwell on it, but will mention in passing that the heavy lifting is done via table occurrences and predicates, i.e., a standard FileMaker relational approach to building a grid. [To be clear, v1 is slow on a WAN — I include it for purposes of comparison.]

Also in the above-mentioned comments section is a suggestion from Arild Schonberg to try looping through the sales records and pushing summarized employee daily totals into $$variables. This is known as the Fast Summary technique — thank you Mikhail Edoshin for documenting it, promoting it, and whatever part you played in its creation. It’s a powerful, game-changing magic spell to have in your bag of tricks, which I wrote about in detail in June 2011 (Fast Summaries), and is in a large part responsible for the high-octane performance of v2, not to mention the leanness of the Relationships Graph.

Normally when an FM12 graph is this sparse, there’s a good chance the solution is using ExecuteSQL, but I promise that no SQL was executed in any of today’s demos. Now as you might imagine, each Employee has a primary key…

…which of course corresponds to a foreign key in Sales:

…and the heavy lifting is done by the “increment date” script.

Thanks to Fast Summaries, the script skips lightly through a found set in Sales for the seven day range we want to see in the grid, generating and populating a repeating global variable like so: $$Ennnn[x], with the main portion representing the employee id, and the x representing a repetition number between 1 and 7, corresponding to the date column position on the grid.

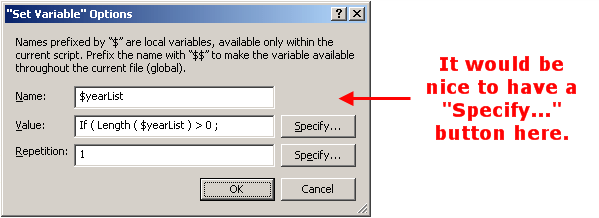

To accomplish this, the script uses a technique known as “dynamic variable instantiation“, which we first explored on this blog nearly two years ago:

Let’s look at a concrete example. Here are the seven days on the Week View:

Lester Downum, whose employee id is E0018, has sales on days 2, 3, and 5…

…and here are his variables in the Data Viewer.

These variables are rendered in the Week View by this repeating calculation:

(The ~wakeUp variable helps the summary repeater at the bottom of the layout stay responsive.)

And here’s the definition of that summary repeater:

A few other points of possible interest:

1. In all the demos, after the “increment date” script has executed, you can view $$elapsedMilliseconds in the Data Viewer, to see how long (in milliseconds) it took. Thank you Nick Orr for clueing me in to the existence of the Get(UTCmSecs) function, which a) makes this possible, and b) is undocumented.

2. In v2 I moved the date_g and date_r fields out of the Dev table and into Employees (to facilitate summary field responsiveness, as per the above ~wakeUp variable comment).

3. For readability, I declared these variables in the “increment date” script:

They are referred to in the step immediately below the two highlighted ones, and I suspected I could gain a slight performance boost by expanding that step from this…

…to this…

…and eliminating the two highlighted Set Variable steps above. But having tested both approaches multiple times, it doesn’t appear to make a bit of difference either way.

4. Variables can not only be dynamically instantiated; they can be dynamically evaporated as well. Since the number of columns in the week view is limited to seven, we know that’s the maximum rep # we’ll need to worry about… so like the valiant little tailor of legend, we can loop through the records in the Employees table, and kill seven at one blow, like so:

And I think that’s enough for today… at least from me. I am very interested to hear your thoughts about making this technique even faster, or if you see any problems with the v2 approach… I hope you’ll submit a comment and let me know.

Hi Kevin,

You’re welcome :) It was an original development, I discovered it in early 2000s when some guy asked in FMExperts if he could get a cross-tab report with a variable number of columns.

By the way, I tested a technically simpler approach to cross-tab reports with dynamically calculated repetitions (the modified file is here, the new layout is at the bottom of the list: https://dl.dropboxusercontent.com/u/9175169/Grid%20Display%20v2%20-%201M%20records.zip) but it turned out to be slower. On my computer the fast summaries method takes around 800 ms, while the dynamic approach ends up somewhere in 1200 ms; on WAN it can be even more noticeable, I guess. This is kind of expected, because fast summaries operate on stored data, while this approach uses unstored calculations. But it’s simpler and more natural for cross tab reports, so it may also be an option.

Kind regards,

Mikhail

Hi Mikhail, it’s good to know some of the history behind Fast Summaries… that technique is simply incredible, and I keep finding new uses for it. So thanks once again.

Also, thanks for that alternative week view. It’s not going to win any speed contests in the WAN realm unfortunately, but it’s nice to see another approach, and one I hadn’t considered.

Regards,

Kevin

Hi

this is amazing! It makes all so much sense :)

I use it now to create very complex reports where before I had all kind of unstored calcs, and all calling each other. Using globals and the getSummary () function creates such a nice cache for the calcs that the report is now generated in a blink of an eye!

Thank you both for sharing!

Hi, This is super. I have a problem.

I am trying to adapt this to my solution. Instead of getting $summaryAmt ( number) , I need to insert a text value. The Evaluate statement will fail if it is a text instead of number. For example, if I change to :

Evaluate (

“Let ( $$” & sales::id & “[” & $repNum & “] = ” & “Abc” & ” ; \”\” )”

)

It will not Evaluate.

Anywhere to get round this ?

Thank you

Make sure you escape the quotation marks around the Abc.

This works:

Evaluate ("Let ( $$" & sales::id & "[" & $repNum & "] = \"Abc\" ; $null )"

)

You can use

\"\"in place of $null if you prefer, but recently I’ve been using $null instead, and find it to be much easier on the eyes, not to mention the fingers.Hope this helps,

Kevin

Hello Kevin…

I have found this technique invaluable in creating dynamic reports relating to, for example, sales by product. Simply incredible performance.

Now I want to push it a little further and be able to accumulate values that come from different tables.

An example is to be able to create a summary of accounts where individual accounts are updated from records in multiple tables: sales, expenses, purchases, receipts, checks issued, etc.

So I effectively want to run the dynamic instantiation routine multiple times (once for each table) and create the variable and value if it does not exist from a previous iteration or, if it does, add the new value to what is currently contained in the variable.

My head feels as though it is attached to the body of the girl in ‘The Exorcist’..! So I thought I would come back to the source and seek your insight into a possible solution.

Many thanks for any suggestions…

Jeff

Hi Jeff,

Sounds like you might want to combine this technique or something similar with the virtual list technique. Search virtual list on this site and see if anything useful comes up. Otherwise, stay tuned, because I’m working on a series on virtual list reporting which will eventually see the light of day here.

Regards,

Kevin

Thanks for the fast response, Kevin.

I didn’t find anything that jumped out at me as a potential solution from the virtual list articles – but did find it heavy going so maybe I missed something.

Assuming I initially create a global variable for all possible values (in my example, all accounts and repetitions 1-12 for each of a range of selected months) then it would seem the real issue is a simple one – at least in theory: to increment the current value of the variable (initially zero) with a new amount each time that account is referenced when processing multiple tables.

But, while I understand how the variable is created, I simply can not visualize how its name can be ‘re-created’ in order to access and update its value without re-creating the variable and losing its current value.

If there is a method to accomplish this, I believe it will solve my problem…

But, in any event, I will look forward to your upcoming article on virtual lists. I am sure I join with many others in expressing my appreciation of you introducing some interesting – and highly usable – concepts in your articles.

Jeff