Recently there was a question about cleaning up a found set on one of the FileMaker discussion forums. When a question of this nature arises, it’s typically some variation on “How can I remove [or delete] duplicate entries?” But this was the opposite: For a given found set of customers, how can I omit those whose Zip codes only appear once in the found set?

In other words, keep the records whose Zips appear multiple times and banish the others.

Note that the challenge was starting from a subset of records. If the challenge had been for all records in the table, one could simply search on ! (find duplicate values) in the Zip code field. However, this trick won’t work when starting from a found set rather than all records. And constrain won’t help here because it doesn’t play nicely with the ! operator.

Update 9 May 2015: it turns out that there is a way to reliably use contrain with the ! operator from within a found set — see Ralph Learmont’s technique + great demo + explanation here — Successfully Find Duplicate Values Within A Set Of Records

Off the top of my head, I suggested…

Sort by Zip code, then loop through the found set from top to bottom… using GetNthRecord() test the current record’s Zip code against the previous record and also against the next record. If both tests are negative, omit, otherwise go to next record (and of course exit after last).

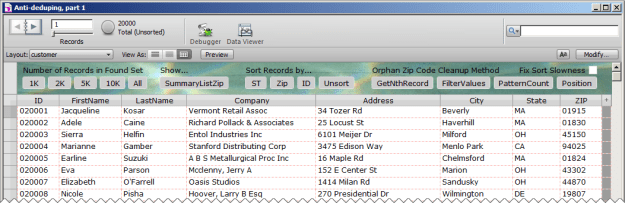

As it turned out, it was a one-time cleanup task, and my suggestion was good enough. But I had a nagging feeling there were better-performing ways to go about this, and today’s demo file, Anti-deduping, part 1, presents four different methods. I encourage you to download it, experiment, and add your own methods or variations… perhaps you’ll come up with a faster approach, in which case, needless to say, I hope you’ll post a comment at the end of this article.

The Four Approaches

- GetNthRecord

- ValueCount + FilterValues

- PatternCount

- Position

If your found set is small, say 1K or 2K records, it won’t matter much which method you use, but as the found set size increases, it becomes clear that each method is faster than its predecessor.

Also, when doing speed comparisons in FileMaker, one needs to consider whether caching is skewing the results. In this demo, I found the timings of the different methods to be consistent, regardless of which order I ran the tests in, or whether I quit and restarted FileMaker between each test.

Another consideration is whether the files are hosted (across a LAN or WAN) or local. I have found performance results to be fairly consistent regardless of the hosting setup… e.g., in my testing, the GetNthRecord approach takes 16 seconds to process 5K records across a WAN, and 15 seconds to do so locally. Unless otherwise specified, all times referred to in this article refer to tests conducted on a local file.

Basic Operation of the Demo

1. Generate a found set (there are 20K records in the demo, so that’s what you’ll get if you click “All”)

2. Optionally sort (more on this below)

3. Click one of these buttons

Okay, let’s look at each method.

GetNthRecord

This was my initial stab at solving the challenge… the “off the top of my head” suggestion described previously. Unfortunately it’s not going to win any performance prizes.

The Other Three Methods

Here is the basic approach used in the remaining three methods:

1. Use a summary list field, SummaryListZip…

…to generate a stack of Zip codes corresponding to the current found set and sort order (or lack thereof). Incidentally, you can easily view the contents of SummaryListZip by clicking here:

2. Push the contents of SummaryListZip into a variable, $$summaryList:

![]()

3. Loop through the found set and process the records:

Also, whereas the GetNthRecord method must be sorted on the Zip field to work, the remaining three methods do not require sorting to work… in fact as we’ll see in just a minute, they’re much faster when the found set is unsorted.

ValueCount + FilterValues

When processing 10K records, this method is twice as fast as GetNthRecord.

PatternCount

With 10K records this method is 5x faster than FilterValues.

Position

Here our logical test is looking for a second occurrence of a given Zip code. There might be more than two occurences but all we need to know is whether there’s a second one. Also note that for this test we “omit record” when the result is false, whereas in all the previous methods we did so when the result of the test was true.

And with 10K records, this method is twice as fast as PatternCount.

Sorted vs. Unsorted

The first method we looked at, GetNthRecord, only works if the found set is sorted. But the other three methods work whether the found set is sorted or not… except… things take considerably longer when the found set is sorted…

…and, the more “granular” the sort, the longer it takes. For example, on 5K records, here are timings for the Position method:

- Unsorted: 1 second

- State sort: 3 seconds

- Zip sort: 5 seconds

- ID sort: 11 seconds

Interestingly, records in the customer table are in the same order when unsorted, as they are when sorted (ascending) on ID (the primary key for the table). This raises the question: Does it take FileMaker longer to walk a sorted found set than an unsorted found set?

This question can be answered by running this script…

…on 20K records, either unsorted or sorted on the State, Zip or ID fields. In all cases the script takes either zero seconds or one second to complete.

Another question: Does it take longer for FileMaker to evaluate SummaryListZip and/or populate $$summaryList when the found set is sorted?

Stepping through the Position script with the debugger on, and with 20K records sorted by ID, this does not seem to be the case. The highlighted step completes almost instantly.

So what the heck is going on? It appears that when the found set is sorted, it takes FileMaker longer to compare the current record against the contents of $$summaryList, so each “If” test within the loop takes a bit longer than it would if the found set were unsorted. Exactly how much longer it takes depends on how granular the sort is.

Is There A Workaround?

Of course. You do know about FileMaker’s undocumented “Fix Sort Slowness” feature, don’t you?

Kidding. I’m just kidding. There is no such feature, but the effect can be achieved as follows:

- Unsort

- Process the found set

- Sort with “Perform without dialog checked” and no sort order specified

This will restore your previous sort order (thank you Ray Cologon for this very cool trick).

Well, that’s about it for today. In today’s demo we looked at techniques that work well when the field contents to be anti-deduped are of fixed length. In part 2 we’ll expand the techniques to work with variable-length field contents.

What about a self join on ZIP code and primary key not equal to itself?

Oh and a goto related records of course :-)

Relationships are not intrinsically found-set aware, so I don’t see how this would work starting from an arbitrary found set.

But if you’ve got a method, please add it to the demo and send me a copy.

Because goto related records can goto all related records in the current found set

I’m having trouble seeing how this would work. As per my previous request, please add your method to the demo, and send me a copy. Thank you.

Ahhh ooops maximum embarrassment factor this trick doesn’t work here because the join reaches outside the foundset. Like you said :-)

Export the found set, import to a temporary table, find with omit duplicates, GTRR. This takes about two seconds for 10,000 records and four or five seconds for 20,000 records.

“So what the heck is going on? It appears that when the found set is sorted, it takes FileMaker longer to compare the current record against the contents of $$summaryList, so each “If” test within the loop takes a bit longer than it would if the found set were unsorted.”

I don’t think that is what is going on.

My testing says that the culprit for the slowdown on the sorted list is your Omit Record step.

My guess is that the slowdown is because FileMaker Pro is preserving the sort order when the found set has changed. This shouldn’t be any work when omitting a record, but it could be that the same sort-preserving routine is called independent of how the found set has changed.

Hi Tom,

I think you just hit the nail squarely on the head.

Thanks,

Kevin

If you perform a find by state, There are less than 1K in found set you system does not work too good.

Hi Robert,

Could you give a more specific example?

Thanks,

Kevin

Looking at your question: “For a given found set of customers, how can I omit those whose Zip codes only appear once in the found set?” I’d do a search on the field (ZIP) that you want to de-dupe with an asterix ‘!’ FileMaker will keep all the duplicates, the unique ZIP codes will be 2777 records… Takes less than a second… In a script: do a search, afterwards do a constrain found set with an asterix in the ZIP field…

Hi Ruben,

As I stated in paragraph #3,

“Note that the challenge was starting from a subset of records. If the challenge had been for all records in the table, one could simply search on ! (find duplicate values) in the Zip code field. However, this trick won’t work when starting from a found set rather than all records. And constrain won’t help here because it doesn’t play nicely with the ! operator.”

I can’t see how your technique would respect the initial found set.

Regards,

Kevin

Hi Kevin,

I’m sorry, I totally overlooked that, rather dumb of me!

But why doesn’t FileMaker play nice with the ! operator? Shouldn’t it? I’ve tried it with sets of 100 records and it seems to work fine… Any ideas on that?

Regards,

Ruben

The ! operator searches for duplicate values. When used with the “Perform Find” option it searches across all records to find duplicates. When used with the constrain option, it searches for records which are duplicates of the values in the found set. In other words, it’s aggregating duplicates occurring outside the found set of records.

Take an example:

I’ve located a small found set of children’s pets and the pets are: macaw, turtle, goat, spider, snake and hen. If I want to search for all other macaws, turtles, goats, spiders and hens I can create five new find requests or I can use the ! operator and use the “Constrain Find” option.

To me that is the behaviour I’d expect if I used “Extend Find.” I think that that “Constrain Find” should search for duplicates within the found set and that “Extend Find” should provide the behaviour that we see in “Constrain Find” presently.

Thanks Malcolm! This clarifies my question, I’m going to try to work this out this week and will post results later…

My last comment about “Extend Find” isn’t appropriate. Extend Find always aggregates the existing found set with the results of the search. That shouldn’t change.

I haven’t tested “Constrain Find” with all the operators but its standard behaviour is to operate within the found set. However, when we use the duplicate operator that goes out the window. If I was the programmer I would be looking at a few options for a search on duplicates.

1. current behaviour: (a) extend found set by finding values within the found set that have duplicate values anywhere else in the table and including those duplicates and (b) constrain the found set by excluding values that are not duplicated within the table.

2. Constrain found set by finding only those values that occur more than once within the found set.

3. Constrain found set by finding values within the found set that have duplicate values anywhere else in the table.

If it was my code, I would be inclined to implement option #2. The current behaviour feels like it was developed to meet a particular use case rather than a general use case.